How to Deconstruct a Search Engine

1. Crawl millions of search result pages.

2. Catalog them based on thousands of ranking factors.

3. See behind the curtain.

The Common Problem

The common problem with SEO comes from a common habit: people blindly accept what “authorities” say because they have no way to generate the data themselves.

Even the most seasoned pros can fall victim to a messy game of SEO telephone…

- Finding tips in blog posts

- Trading trends in a Slack channel

- Attending talks at industry conferences

- Speaking to your “friend who used to work at Google”

For many, this results in a surface-level understanding of algorithms and a fingers-crossed SEO strategy. Not to mention that much of the commonly touted advice is ineffective. Or flat-out wrong.

It’s a good thing you aren’t like most people in SEO.

We promise, there’s a better way. And it starts with actually understanding an algorithm.

Our Uncommon Solution

At SearchTides, we go directly to the source, breaking down search algorithms in a quantifiable, scientific way. We then apply that knowledge to our strategies, because a cutting-edge approach and a relentless pursuit of staying ahead of the trends are the only way to stay on top.

Step One: Collect and Measure Data

Let’s take the most popular search algorithm: Google. What comprises the Google algorithm?

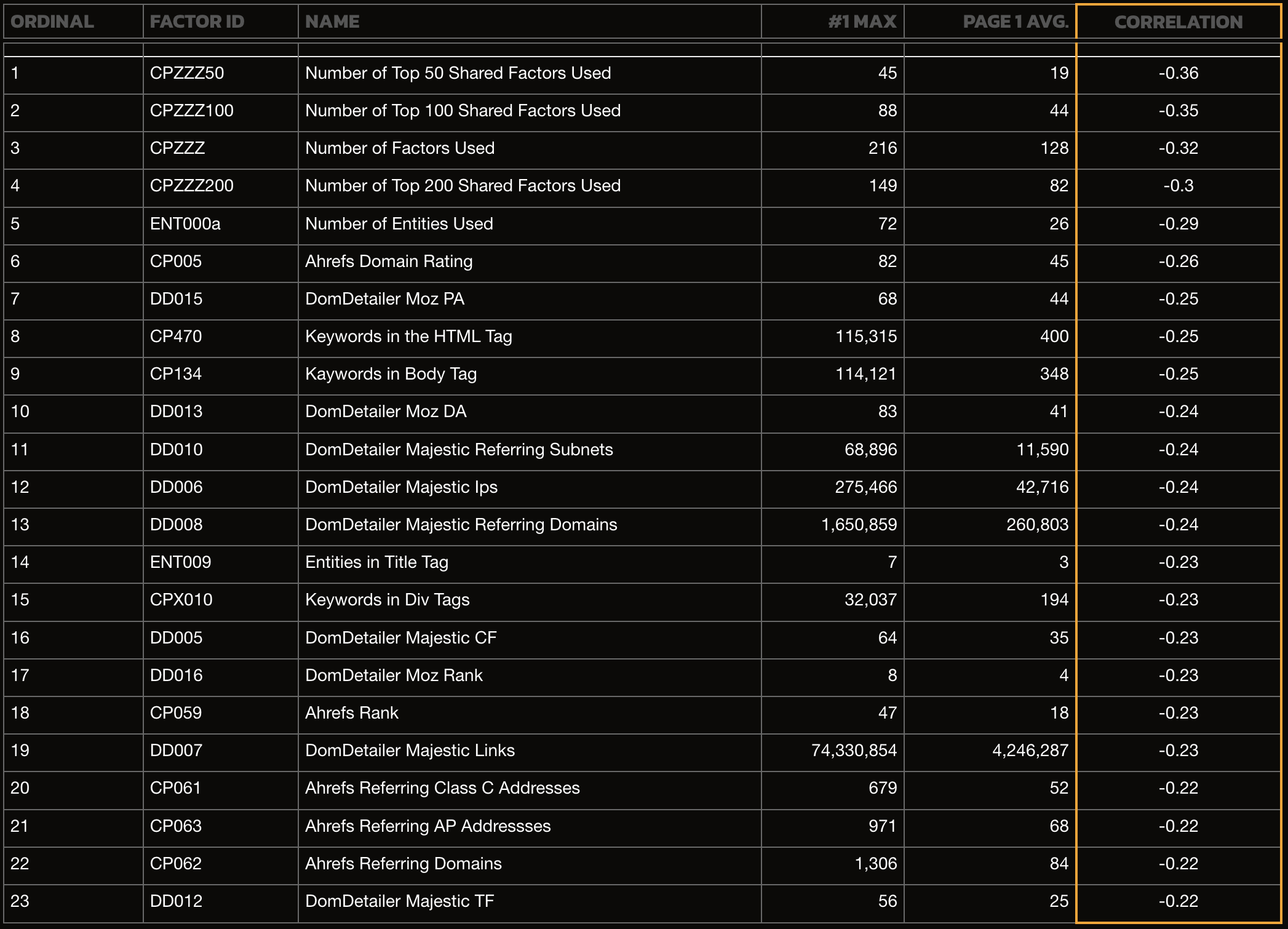

Here it is. Take it in – in all its glory.

| ORDINAL | FACTOR ID | NAME | #1 MAX | PAGE 1 AVG. | CORRELATION |
|---|---|---|---|---|---|
| 1 | CPZZZ50 | Number of Top 50 Shared Factors Used | 45 | 19 | -0.36 |
| 2 | CPZZZ100 | Number of Top 100 Shared Factors Used | 88 | 44 | -0.35 |
| 3 | CPZZZ | Number of Factors Used | 216 | 128 | -0.32 |
| 4 | CPZZZ200 | Number of Top 200 Shared Factors Used | 149 | 82 | -0.30 |
| 5 | ENT000a | Number of Entities Used | 72 | 26 | -0.29 |
| 6 | CP005 | Ahrefs Domain Rating | 82 | 45 | -0.26 |
| 7 | DD015 | DomDetailer Moz PA | 68 | 44 | -0.25 |
| 8 | CP470 | Keywords in the HTML Tag | 115,315 | 400 | -0.25 |
| 9 | CP134 | Keywords in Body Tag | 114,121 | 348 | -0.25 |
| 10 | DD013 | DomDetailer Moz DA | 83 | 41 | -0.24 |
| 11 | DD010 | DomDetailer Majestic Referring Subnets | 68,896 | 11,590 | -0.24 |
| 12 | DD006 | DomDetailer Majestic IPs | 275,466 | 42,716 | -0.24 |
| 13 | DD008 | DomDetailer Majestic Referring Domains | 1,650,859 | 260,803 | -0.24 |
| 14 | ENT009 | Entities in Title Tag | 7 | 3 | -0.23 |
| 15 | CPX010 | Keywords in Div Tags | 32,037 | 194 | -0.23 |
| 16 | DD005 | DomDetailer Majestic CF | 64 | 35 | -0.23 |
| 17 | DD016 | DomDetailer Moz Rank | 8 | 4 | -0.23 |
| 18 | CP059 | Ahrefs Rank | 47 | 18 | -0.23 |
| 19 | DD007 | DomDetailer Majestic Links | 74,330,854 | 4,246,287 | -0.23 |
| 20 | CP061 | Ahrefs Referring Class C Addresses | 679 | 52 | -0.22 |
| 21 | CP063 | Ahrefs Referring IP Addresses | 971 | 68 | -0.22 |
| 22 | CP062 | Ahrefs Referring Domains | 1,306 | 84 | -0.22 |
| 23 | DD012 | DomDetailer Majestic TF | 56 | 25 | -0.22 |

Search algorithms are simply thousands of “ranking factors.” We break them down into three categories:

- On-page factors – the things happening on the page (like words).

- Off-site factors – the things happening off the website (like links).

- Technical factors – the things happening on a website that are performance (or crawl) related.

And how do we collect and measure these factors? Well, we’ve built sophisticated technology:

- We crawl millions (and millions) of search results in perpetuity.

- We catalog them based on thousands of ranking factors.

- We do that enough times to get a real understanding of what’s important across all search results.

- We do that for specific search queries, which gets us query-specific data.
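To make the "catalog and measure" idea concrete, here is a minimal sketch of how a correlation between a factor and ranking position could be computed for one query. It is an illustration only, not the actual SearchTides pipeline: the record layout (`position`, `factors`), the factor names, and the numbers are all made up, and we assume a plain Pearson correlation against position.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant factor carries no signal
    return cov / sqrt(var_x * var_y)

def factor_correlations(results):
    """Correlate every factor with ranking position.

    results: list of dicts shaped like {"position": int, "factors": {name: number}}.
    Factors missing from a page are treated as 0 (an assumption of this sketch).
    """
    positions = [r["position"] for r in results]
    names = sorted({name for r in results for name in r["factors"]})
    return {
        name: pearson([r["factors"].get(name, 0) for r in results], positions)
        for name in names
    }

# Hypothetical cataloged results for one query (made-up numbers).
sample = [
    {"position": 1, "factors": {"referring_domains": 120, "keywords_in_body": 40}},
    {"position": 2, "factors": {"referring_domains": 95, "keywords_in_body": 12}},
    {"position": 3, "factors": {"referring_domains": 60, "keywords_in_body": 35}},
    {"position": 4, "factors": {"referring_domains": 30, "keywords_in_body": 20}},
]

# Most negative first: position 1 is the best spot, so a negative value
# means "more of this factor goes with a better ranking".
for name, corr in sorted(factor_correlations(sample).items(), key=lambda kv: kv[1]):
    print(f"{name}: {corr:+.2f}")
```

Because position 1 is the top result, a negative coefficient in this setup means higher factor values tend to sit closer to the top.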

But there’s another key component of understanding the algorithm – results are relative. As opposed to an absolute single strategy existing across all search results, getting to number one is quite literally about beating whoever happens to be at the top in your industry.

Since their page is ultimately a summation of ranking factors (that happen to be important for a given search query), we can help you beat them.
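As a rough illustration of "results are relative," the sketch below compares your page with the top-ranked page, factor by factor, and keeps only the factors that appear to matter for the query. Everything here is hypothetical: the `factor_gaps` helper, the `min_strength` cutoff, and the sample numbers are assumptions for the example, not a description of our tooling.

```python
def factor_gaps(your_page, top_page, correlations, min_strength=0.2):
    """List factors where the top-ranked page is ahead of yours, strongest first.

    All three dicts map a factor name to a number. A negative correlation means
    higher values go with better (lower-numbered) positions, so "ahead" means
    "has more"; a positive correlation flips that.
    """
    gaps = []
    for name, corr in correlations.items():
        if abs(corr) < min_strength:
            continue  # too weak to act on (the cutoff is an arbitrary assumption)
        yours = your_page.get(name, 0)
        theirs = top_page.get(name, 0)
        ahead = theirs > yours if corr < 0 else theirs < yours
        if ahead:
            gaps.append(
                {"factor": name, "yours": yours, "theirs": theirs, "correlation": corr}
            )
    gaps.sort(key=lambda g: abs(g["correlation"]), reverse=True)
    return gaps

# Made-up numbers for illustration only.
correlations = {"entities_used": -0.29, "referring_domains": -0.22, "page_size_kb": 0.05}
top_page = {"entities_used": 72, "referring_domains": 1306, "page_size_kb": 900}
your_page = {"entities_used": 20, "referring_domains": 1500, "page_size_kb": 400}

for gap in factor_gaps(your_page, top_page, correlations):
    print(gap)
```

The point of the design is that the target is the current number one for that query, not a universal benchmark.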

Step Two: Stay On Top Of Algorithm Updates

Most people rely on Google’s word on what’s included in algorithm updates. But remember, we’re no longer making decisions based on what everyone else says – and that includes Google.

They don’t lie; they just don’t tell us the whole truth.

But data does.

What’s really happening with an algorithm update is a re-ordering of which ranking factors are most important. A factor that once carried significant weight can become less significant post-update.
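One simple way to picture that re-ordering: rank the factors by correlation strength before and after an update, then see which ones climbed or fell. This is a sketch under stated assumptions; `importance_shifts` is a hypothetical helper, "importance" is approximated by the absolute correlation, and the snapshot values are invented.

```python
def importance_shifts(before, after):
    """Compare two {factor: correlation} snapshots.

    Returns (factor, rank_before, rank_after) tuples for factors present in
    both snapshots, biggest climbers first. Rank 1 is the strongest factor.
    """
    def ranking(snapshot):
        ordered = sorted(snapshot, key=lambda name: abs(snapshot[name]), reverse=True)
        return {name: i + 1 for i, name in enumerate(ordered)}

    rank_before = ranking(before)
    rank_after = ranking(after)
    shared = rank_before.keys() & rank_after.keys()
    shifts = [(name, rank_before[name], rank_after[name]) for name in shared]
    # A positive (before - after) difference means the factor moved up the list.
    shifts.sort(key=lambda s: (s[1] - s[2], s[0]), reverse=True)
    return shifts

# Invented snapshots taken before and after an update.
before = {"keywords_in_body": -0.30, "referring_domains": -0.20, "entities_used": -0.10}
after = {"keywords_in_body": -0.10, "referring_domains": -0.20, "entities_used": -0.30}

for name, old_rank, new_rank in importance_shifts(before, after):
    print(f"{name}: #{old_rank} -> #{new_rank}")
```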

Our ability to stay on top of the algorithm means we instantly know which factors became more or less important. Plus, it allows us to spot future trends in what Google deems important before they’re rolled out publicly. This is where our unrelenting pursuit of having the freshest, most significant data pays off, giving you a potent competitive advantage.

Step Three: Implement Informed Solutions

So what do we do with all of this data? Two things.

First, we identify and implement any immediate solutions to your SEO strategy. If there’s low-hanging fruit, we identify exactly what it is. We don’t send our clients on a 162-point wild-goose chase to update tags and descriptions – if the data doesn’t show it’ll make a measurable impact, we don’t waste the time.

Second, we also use the data as a map to carve out larger strategies. The factors we see gaining importance over time lead to hypotheses, which we test within our R&D department. Once confirmed, we roll out those larger recommendations to our clients. That means you’ll have a comprehensive strategy for what works at the website level: technical SEO, website architecture, recommendations on technology stacks, and so on.

We also create an iterative process for optimization at the page level. This provides a blueprint for what to optimize and helps us to inform your optimization strategy for years into the future.

Our Solution In Action

We identify powerful correlations that don’t show up in existing tools.

Average SEO Joe tends to think about SEO as happening in one of two ways: on-page or off-page. But by measuring thousands of ranking factors across all page components, we can see correlations that exist beyond those constructs – ones that don’t appear in any set of tools.

For example, everyone thinks about using keywords on a page, or topics like keyword density, but not where and how those keywords are used across a number of the page’s different sections – or what we call “content zones.” Optimizing content zones – and maintaining a balance between them – is the key to winning ranking diversity factors.

In other words, if you’re using loads of keywords in your paragraphs and H1s/H2s, but never in your sidebar or nav, and never using entities, it’s entirely possible on-site tools will show a perfect density score when your page is actually both over- and under-optimized. You need a way to measure that.
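One rough way to measure it is to count keyword hits per zone instead of per page. The sketch below uses Python's standard-library HTML parser. The tag-to-zone mapping (`ZONE_TAGS`) and the helper names are our assumptions for illustration; they are not a definition of what counts as a content zone.

```python
from collections import Counter
from html.parser import HTMLParser
import re

# Hypothetical mapping of HTML tags to "content zones" (an assumption of this sketch).
ZONE_TAGS = {
    "title": "title",
    "h1": "headings",
    "h2": "headings",
    "p": "paragraphs",
    "nav": "nav",
    "aside": "sidebar",
}

class ZoneCounter(HTMLParser):
    """Counts whole-word keyword matches inside each content zone."""

    def __init__(self, keyword):
        super().__init__()
        self.pattern = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
        self.stack = []  # currently open zone tags, innermost last
        self.counts = Counter({zone: 0 for zone in set(ZONE_TAGS.values())})

    def handle_starttag(self, tag, attrs):
        if tag in ZONE_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        if tag in self.stack:
            # Pop back to the matching tag, tolerating unclosed inner tags.
            while self.stack and self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        if self.stack:
            zone = ZONE_TAGS[self.stack[-1]]
            self.counts[zone] += len(self.pattern.findall(data))

def zone_counts(html, keyword):
    """Return {zone: number of keyword matches} for one HTML document."""
    parser = ZoneCounter(keyword)
    parser.feed(html)
    parser.close()
    return dict(parser.counts)

page = (
    "<html><head><title>Mortgage rates</title></head><body>"
    "<nav><a href='/'>Home</a></nav>"
    "<h1>Mortgage guide</h1>"
    "<p>A mortgage is a loan. Compare mortgage offers.</p>"
    "<aside>Contact us</aside>"
    "</body></html>"
)

counts = zone_counts(page, "mortgage")
empty_zones = sorted(zone for zone, n in counts.items() if n == 0)
print(counts)
print("zones with no keyword:", empty_zones)
```

A page like this one would look well optimized on a single density score while its nav and sidebar zones carry no keyword at all.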

We let the data (not Google PR) tell the whole story.

In December 2022, Google released a statement about their latest algorithm update. Publicly, the update was said to be about links. Privately, the data told a radically different story.

What went unmentioned was a complete reconstruction of how Google measures entities – a change so significant that it will fundamentally impact how rankings are constructed over the next three years.

Entities are officially recognized sub-topics of a given topic on the internet. For example, in the topic of “finance,” “mortgage” would be considered an entity. So would “loan.”

In previous algorithm updates, one real measurement we saw in the data was a correlation between the number of related entities used on a page and the ranking of the page – the same way you’d use a keyword a number of times on a page.

But with the update, entities are now treated as topic groupings within a website itself. If this seems like a massive change from “how many times a keyword appears on a page,” that’s because it is.

How big of a change? Before this update, we could view entities as an apple – or even a few types of apples. But now, the significance of entities is like every type of fruit (in every form) that has ever been consumed.

And no one is talking about it.

We see into the future.

Over two years ago, we spotted a new ranking factor starting to appear in our data. It showed that there was a material difference in rankings between websites with keywords in the first 30 KB of their web page, and those without.

Here’s what that means:

- Google is looking at your source code.

- If you have a bloated codebase with no human-readable text, it’s entirely possible that the first 30 KB of your source code has zero words, let alone keywords.
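To check a page against this pattern, you can look only at the first 30 KB of the raw source and see whether any readable text in it contains your keyword. This is a rough sketch: it assumes 30 KB means 30 × 1024 bytes, it uses a crude regex-based tag stripper instead of a real HTML parser, and `keyword_in_first_window` is a hypothetical helper name.

```python
import re

WINDOW_BYTES = 30 * 1024  # "first 30 KB", taken here as 30 * 1024 bytes (an assumption)

def visible_text(html):
    """Crudely reduce HTML to human-readable text.

    Script and style blocks are dropped even when the closing tag was cut off
    by the byte window, and a tag truncated at the very end is dropped too.
    """
    html = re.sub(r"(?is)<(script|style)\b.*?(?:</\1\s*>|\Z)", " ", html)
    html = re.sub(r"(?s)<[^>]*(?:>|\Z)", " ", html)
    return re.sub(r"\s+", " ", html).strip()

def keyword_in_first_window(source, keyword, window=WINDOW_BYTES):
    """True if `keyword` appears in the readable text of the first `window` bytes."""
    head = source[:window].decode("utf-8", errors="ignore")
    return keyword.lower() in visible_text(head).lower()

# A bloated page: about 40 KB of inline script before any readable text.
bloated = (
    b"<html><head><script>" + b"var x = 1;" * 4000 + b"</script></head>"
    b"<body><p>mortgage rates</p></body></html>"
)
# A lean page: readable text right at the top.
lean = b"<html><body><p>mortgage rates</p></body></html>"

print("bloated:", keyword_in_first_window(bloated, "mortgage"))
print("lean:", keyword_in_first_window(lean, "mortgage"))
```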

So, how do we gain this insight from the data?

It shows up in the form of a ranking factor – one that previously held little to no weight – that begins to move its way up the priority list.

But here’s the thing: these shifts don’t happen overnight. They happen slowly. Our ability to monitor these small changes means we can make assertions about the direction Google wants to move in.

And because we know when changes are coming, we’re able to do two major things:

- According to the trend we’re seeing, we can make immediate adjustments to your website.

- We can form broader hypotheses about why something is happening before you need them, so you can start having informed conversations years in advance.

And this is just one example of the trends we’re seeing in the data.

SEO is a zero-sum game

The moment you get a leg up on the competition, someone else will try to return the favor.

And so the cycle repeats.

At SearchTides, these are the two things we care about most:

- Getting you the best information possible as it applies to your unique situation.

- Applying it correctly so we can roll it out for you quickly and at scale.

With Google continuing to forge down a “winner takes all” pathway, the threshold to be competitive gets higher and higher.

Data, and the knowledge of how to use it, is the only way to establish a significant competitive advantage.

Let’s future-proof your SEO strategy for long-term growth.